With ILTIS GmbH

ILTIS advises companies and municipal institutions on process optimization.

Partnering with Codesphere allowed ILTIS to rapidly develop new applications that optimize its customers' workflows.

From PoC to Cross Customer Rollout in < 6 Weeks

ILTIS partnered with Codesphere to implement and deploy a speech recognition and knowledge retrieval use case based on the latest open source AI technologies.

Empowered by Codesphere's ease of use and ability to scale, this PoC project is now used as a blueprint to roll out the solution across other ILTIS customers.

Realtime Speech Recognition

Semantic Search

ERP Integration

Operator Cockpit UI

6 Weeks

Total implementation time

2000+

Documents embedded for Vector DB

100%

GDPR compliant

Composable Architecture

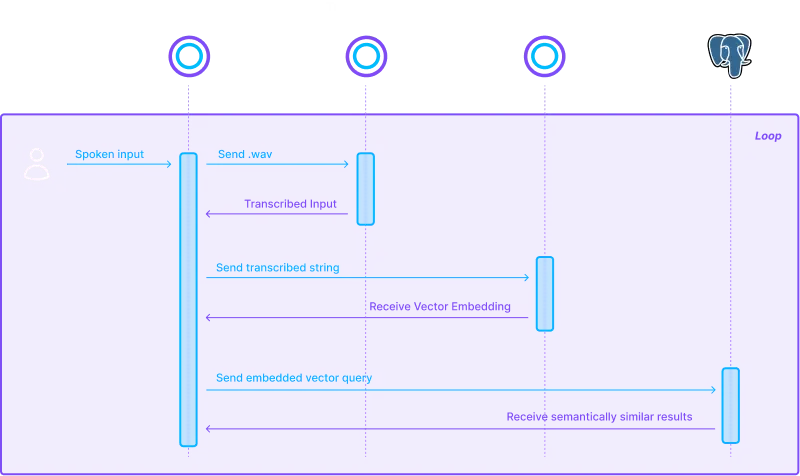

The application consists of a transcription backend, a frontend, and a sentence transformer. The sentence transformer creates numerical representations (embeddings) of the transcribed data, which are used to store entries in and query a vector database. Everything is hosted on Codesphere.
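To illustrate what querying by embedding means, here is a minimal sketch of similarity ranking. It is an in-memory stand-in for the vector database, not the project's code: the document ids, the three-dimensional toy vectors, and the function names are all assumptions made for the example.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec, store, k=2):
    """Return the ids of the k stored vectors most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), doc_id) for doc_id, vec in store.items()]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:k]]


# Hypothetical toy embeddings; real sentence embeddings have hundreds of dimensions.
STORE = {
    "pump-maintenance": [0.9, 0.1, 0.0],
    "invoice-process": [0.0, 0.2, 0.9],
    "valve-inspection": [0.8, 0.3, 0.1],
}

if __name__ == "__main__":
    # A query vector pointing in roughly the same direction as the two technical documents.
    print(top_k([1.0, 0.2, 0.0], STORE))
```

In the deployed system, the sentence transformer would produce the vectors and the database would perform the ranking.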

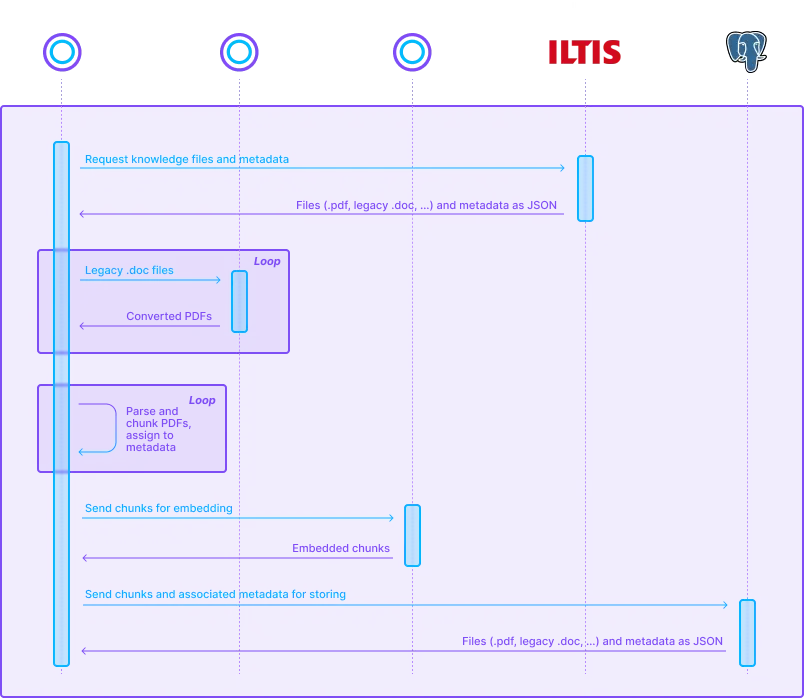

Sophisticated Ingestion

To make ILTIS’ knowledge available to its experts, we pulled all of the data from the ERP and ran it through a sophisticated ingestion pipeline that converts everything into the right format, chunks the data, and uploads it to the database. The entire process is fully automated.
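The chunking step can be sketched as follows. The source does not describe the actual chunking strategy, so the word-based splitting, the chunk size, and the overlap used here are assumptions chosen only to show the idea.

```python
def chunk_text(text, max_words=200, overlap=40):
    """Split text into word-based chunks; consecutive chunks share `overlap` words."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    chunks = []
    step = max_words - overlap
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        # Stop once the current chunk reaches the end of the text.
        if start + max_words >= len(words):
            break
    return chunks


if __name__ == "__main__":
    sample = " ".join(str(i) for i in range(10))
    for chunk in chunk_text(sample, max_words=4, overlap=1):
        print(chunk)
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from either side, at the cost of storing some words twice.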

Alexander Ott

Geschäftsführer @ ILTIS

Together with Codesphere, we developed a working PoC in just 6 weeks, which allowed us to test and gather feedback incredibly quickly.

Open Source Stack

Frontend

The frontend is a basic SvelteKit application that records the microphone input and sends it as a .wav file to the transcription server.
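For readers unfamiliar with the .wav payload, the sketch below builds one in memory using Python's standard library. It is not the SvelteKit code, which runs in the browser. The 16 kHz mono 16-bit format is an assumption based on what whisper.cpp's examples expect as input, and the function names are hypothetical.

```python
import io
import math
import struct
import wave

SAMPLE_RATE = 16_000  # Hz; assumed here because whisper.cpp works on 16 kHz audio


def sine_tone(freq_hz, seconds, sample_rate=SAMPLE_RATE):
    """Generate a quiet test tone as signed 16-bit integer samples."""
    count = int(seconds * sample_rate)
    return [int(0.3 * 32767 * math.sin(2 * math.pi * freq_hz * n / sample_rate))
            for n in range(count)]


def pcm_to_wav_bytes(samples, sample_rate=SAMPLE_RATE):
    """Wrap mono 16-bit PCM samples in a WAV container and return the bytes."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(1)      # mono
        wav.setsampwidth(2)      # 2 bytes = 16 bits per sample
        wav.setframerate(sample_rate)
        wav.writeframes(struct.pack(f"<{len(samples)}h", *samples))
    return buffer.getvalue()


if __name__ == "__main__":
    payload = pcm_to_wav_bytes(sine_tone(440, 0.5))
    print(len(payload), "bytes, starts with", payload[:4])
```

The resulting bytes are what a client would send as the request body or as a file upload to the transcription endpoint.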

Transcription Server

A Whisper.cpp server running on a Codesphere Pro plan, enabling real-time CPU inference for speech to text. No GPU is needed, which keeps costs down.

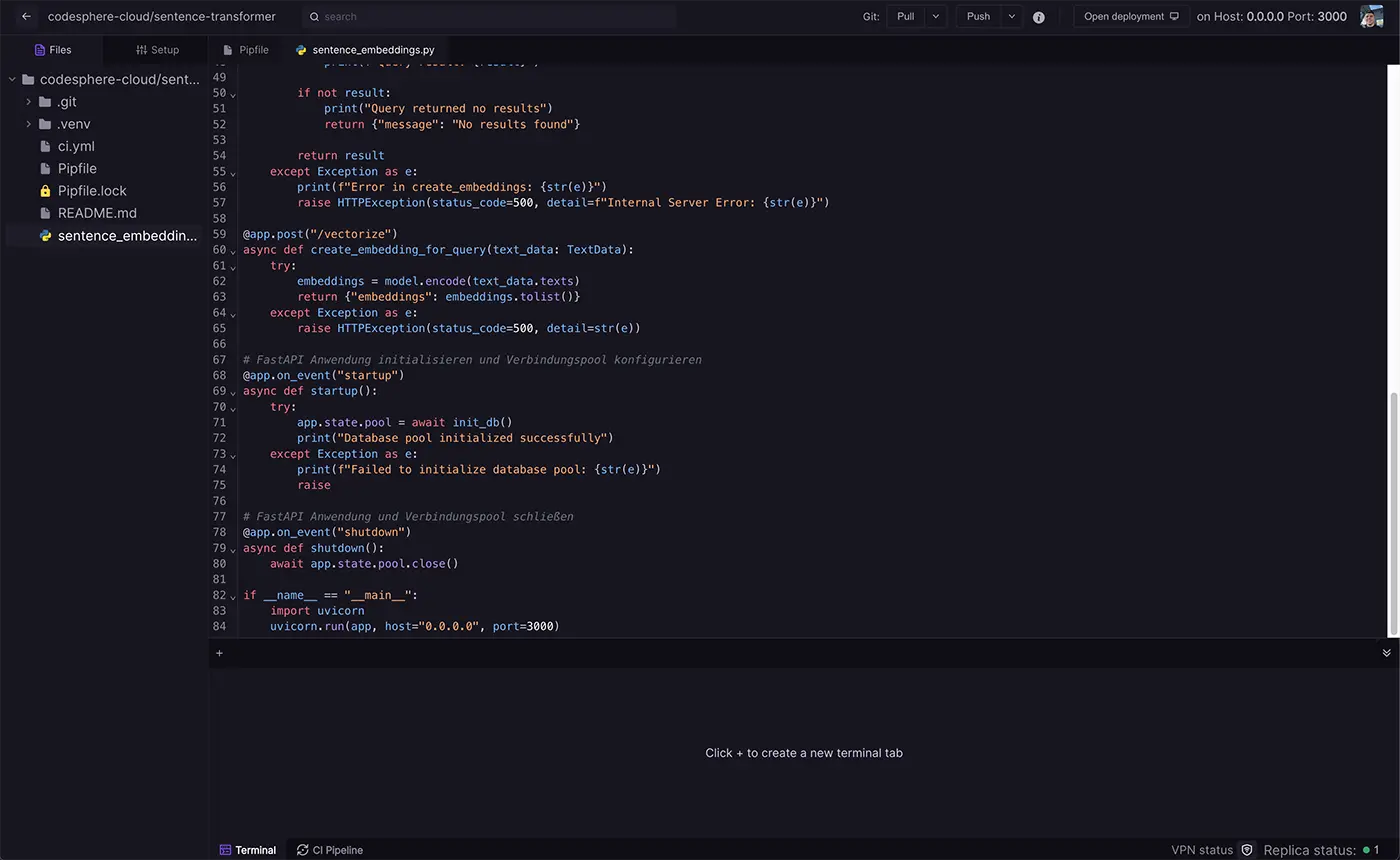

Sentence Transformer

A FastAPI server running the sentence-transformers library to create the embeddings needed to store and retrieve ILTIS data from the database.

PostgreSQL + pgvector

A PostgreSQL database with the pgvector extension, which stores vector embeddings alongside the existing data and provides fast vector search, making it a good fit for RAG use cases.
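A similarity query against pgvector might look like the sketch below. The table name `chunks`, its columns, and the 384-dimension vector width are hypothetical, since the source does not describe the schema. The `<=>` cosine-distance operator and the bracketed text format for vectors are part of pgvector itself. The `%s` placeholders assume a psycopg-style driver.

```python
def to_vector_literal(embedding):
    """Format floats as pgvector's text input, e.g. '[0.1,0.2,0.3]'."""
    return "[" + ",".join(repr(float(x)) for x in embedding) + "]"


# Hypothetical schema; the vector width must match the embedding model's output size.
CREATE_SQL = """
CREATE EXTENSION IF NOT EXISTS vector;
CREATE TABLE IF NOT EXISTS chunks (
    id bigserial PRIMARY KEY,
    content text NOT NULL,
    embedding vector(384)
);
"""

# <=> is pgvector's cosine-distance operator; a smaller distance means more similar.
SEARCH_SQL = """
SELECT id, content, embedding <=> %s::vector AS distance
FROM chunks
ORDER BY distance
LIMIT %s;
"""


def search_params(query_embedding, limit=5):
    """Build the parameter tuple for SEARCH_SQL."""
    return (to_vector_literal(query_embedding), limit)


if __name__ == "__main__":
    print(SEARCH_SQL.strip())
    print(search_params([0.25, 0.5, 1], limit=3))
```

With a driver such as psycopg, the query would run as `cursor.execute(SEARCH_SQL, search_params(vec))`. Passing the vector as a parameter instead of interpolating it into the SQL string avoids injection issues.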

Data Ingestion Pipeline

A Node.js server that pulls data from the ERP, parses all PDFs, creates embeddings, and checks and fills the database.

PDF Server

A Stirling-PDF endpoint that takes in legacy .doc files and converts them into PDF files that can be parsed by the main Node.js server.

Freedom in Deployment & Development

The initial structure of the application was developed locally using standard IDEs. Codesphere strips away complex Ops setups and simplifies proxies and path-based routing, which makes CORS issues a thing of the past, so preview deployments for all stakeholders were easy to set up.

Final tweaks were made right in the Codesphere IDE, removing the need for endless git pushes and pulls in the final stages of app development.

Fully GDPR Compliant

Because the stack is open source and self-hosted, no data ever leaves the application and its components. Since Codesphere offers servers in Frankfurt, Germany, everything can be hosted within the EU, making it GDPR compliant out of the box.

Get in touch with Codesphere

Zero-Config

All cloud provisioning, container orchestration, and networking are handled by Codesphere out of the box. You can deploy a running AI model in less than two minutes.

Deploying AI apps on Codesphere is really straightforward.

Helmuth Bederna

Tech Lead @ Medmastery

Reactives: A New Deployment Category

Our patented deployment category, Reactives, surpasses serverless, buildpacks, Docker PaaS, k8s, and VMs in ease of use and deployment speed, while supporting enterprise-level complexity.

It excels with high-resource applications like LLMs, simplifying scaling to zero for low-traffic apps and enhancing efficiency when scaling up by provisioning resources ad hoc, potentially reducing infrastructure costs by 50% or more.

Reactive Startup

Eliminate Expertise Bottlenecks

Codesphere takes away the need for dedicated Ops teams, enabling any developer to deploy performant, efficiently scaling AI applications without DevOps or MLOps experience.

This eliminates the expensive and time-consuming setup and maintenance of infrastructure, as well as the traditional challenges associated with scaling.

Learn more or get in touch now!

To get you started right away, we have made some of our training and informational materials available for download below.

Get in touch