Can a chatbot help to communicate our USPs?

Can we build a chatbot that answers questions like 'How is Codesphere different from Vercel?' reliably enough? Let's find out.


With the release of ChatGPT and the excitement about generative AI that followed, chatbots are experiencing a new wave of hype. The previous generation of chatbots fell short of expectations, and some had already declared the medium dead. Well, it seems they were wrong.

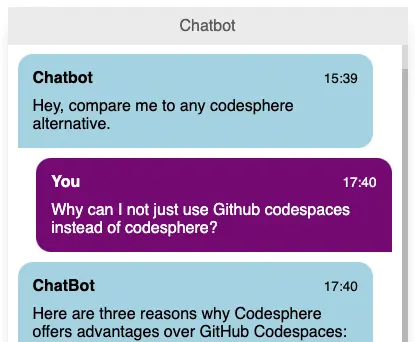

Today we will attempt to use a chatbot to solve a key problem many SaaS startups face: the more complex the product, the harder it is to explain what it does and how it differs from competitors. Can we teach an LLM the required knowledge and build a chatbot that answers questions like "How is Codesphere's service different from Vercel?" reliably enough to later embed it into a landing page? Let's find out.

Our example chatbot will have fewer than 500 lines of code and will be implemented in Python, with Flask as the web server. For the model, we have two options:

1. Create a custom model:

- It would be trained specifically for our use case.

- The training process can be expensive.

- We need to create the dataset ourselves.

2. Use a general-purpose pre-trained model like ChatGPT:

- It is already trained.

- It requires a small fee for usage.

- It is not specifically trained for our use case.

- The model's training data only goes up to a certain date.

For now, we will use OpenAI's ChatGPT API, as it's super easy to get started with, which makes it great for anyone following along.

Code: https://github.com/codesphere-cloud/usp-chatbot-blog

Live demo (subject to the availability of our API credits): https://41764-3000.2.codesphere.com/

You can also deploy a free workspace in Codesphere with a single click. If you haven't already, create a free account: https://signup.codesphere.com/

Then use this to deploy directly: https://codesphere.com/https://github.com/codesphere-cloud/usp-chatbot-blog

We are already looking into testing the same use case with a self-hosted Llama 2 in Codesphere, so stay tuned for that in an upcoming blog post.


Development of the user interface

Our website needs a form through which users can ask the chatbot questions. This is easy to build with an HTML form. We then use JavaScript to intercept the form submission. The user's question is inserted into an HTML template and added to the chat history. Next, we send a GET request to the API, and the received answer is appended to the chat history in the same way.
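The GET request carries the question in the query string, so characters such as `?`, `&` or `=` in the question have to be percent-encoded. In the browser, JavaScript's `encodeURIComponent` takes care of this; the minimal Python sketch below builds the same kind of URL with the standard library, for example to test the endpoint from a script. The parameter name `msg` and the `/get` path match the Flask app in this post; the helper name `build_question_url` is hypothetical.

```python
from urllib.parse import urlencode


def build_question_url(question: str, endpoint: str = "/get") -> str:
    """Build the GET URL for one question (hypothetical helper).

    The question travels in the "msg" query parameter, which is the name
    the Flask route reads. urlencode percent-encodes reserved characters
    and writes spaces as "+", which the server decodes back to spaces.
    """
    return f"{endpoint}?{urlencode({'msg': question})}"
```

For example, `build_question_url("How is Codesphere different from Vercel?")` returns `/get?msg=How+is+Codesphere+different+from+Vercel%3F`; without encoding, the trailing `?` would be ambiguous inside the query string.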

Python script:

```python
import os
from datetime import datetime

from flask import Flask, render_template, request

app = Flask(__name__)
app.static_folder = 'static'


@app.route("/")
def home():
    # Pass the current time to the template so the bot's first message is timestamped
    now = datetime.now()
    time = now.strftime("%H:%M")
    return render_template("index.html", time=time)


@app.route("/get")
def get_bot_response():
    # The front end sends the question as the "msg" query parameter
    user_text = request.args.get('msg')
    # chatbot_response() (next section) must be defined in this module
    # above the __main__ block, because app.run() blocks
    response = chatbot_response(user_text)
    return response


if __name__ == "__main__":
    # Use the PORT environment variable if set, otherwise default to 3000
    port = int(os.environ.get("PORT", 3000))
    app.run(host="0.0.0.0", port=port)
```

The first function of the web server renders the interface from an HTML file. We pass the current time to the template so that the chatbot's first message shows the current timestamp. The second function receives the user's question and sends back the chatbot's response: it reads the question from the `msg` parameter of the GET request, passes it to the `chatbot_response` function, which generates the answer, and finally returns that answer to the user.
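`request.args.get('msg')` returns `None` when the parameter is missing, and the route forwards whatever it receives to `chatbot_response`, which spends API credits on every call. A minimal sketch of a guard that could run first; `clean_question` and the 500-character limit are hypothetical choices, not part of the original project.

```python
from typing import Optional

# Hypothetical limit; long inputs cost more tokens and are rarely real questions
MAX_QUESTION_LENGTH = 500


def clean_question(raw: Optional[str]) -> Optional[str]:
    """Return the trimmed question, or None if it should not reach the API."""
    if raw is None:  # "msg" parameter missing from the request
        return None
    question = raw.strip()
    if not question or len(question) > MAX_QUESTION_LENGTH:
        return None
    return question
```

In the route, this could look like `question = clean_question(request.args.get('msg'))`, returning a short message with a 400 status (`return "Please ask a question.", 400`) when the result is `None`, so the OpenAI API is only called for usable input.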

Generating the response:

```python
import os

import openai  # pre-1.0 SDK, see the note on installation below

# Read the API key from the environment instead of hard-coding it
openai.api_key = os.environ["OPENAI_API_KEY"]

# Load the context once at startup; it is sent along with every request
with open("onepager_developer.txt", "r", encoding="utf-8") as f:
    onepager_developer = f.read()


def chatbot_response(question):
    input_data = [
        # The system message sets the bot's behaviour and supplies the context
        {
            "role": "system",
            "content": (
                "You are a chatbot on Codesphere's website. "
                "You need to compare Codesphere with alternatives in 3 short bullet points. "
                "With the following context:\n" + onepager_developer
            ),
        },
        # The visitor's question
        {"role": "user", "content": str(question)},
    ]

    # openai.ChatCompletion only exists in openai<1.0
    completion = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=input_data,
    )

    # The response object can be indexed like the parsed JSON response
    data = completion["choices"][0]

    if data["finish_reason"] == "stop":
        # Convert newlines to <br> so the answer renders correctly as HTML
        response = data["message"]["content"].replace("\n", "<br>")
    else:
        response = "An error occurred."

    return response
```

For this project, we use the "gpt-3.5-turbo" model from OpenAI. First, we install the library. The code above uses the `openai.ChatCompletion` interface, which was removed in version 1.0 of the Python SDK, so the version needs to be pinned: `pipenv install "openai<1.0"`. Next, we provide the library with our API key so it can make requests. We then build a conversation list that gives the model additional information. This lets us work around two limitations: the model was not trained specifically for this application, and it knows nothing about events after its training cut-off date. In the conversation list, we first tell the model how to respond and what the answer should look like.

Then we provide the model with information about our topic, in this case Codesphere. After that, the user's question is added to the conversation with the role `user`. Finally, we tell the OpenAI library which model to use and pass it the conversation, so the model has more information to generate its response from. The returned object can be indexed like the parsed JSON response, which gives us easy access to the data.
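Keeping the conversation list in a small helper makes it possible to inspect exactly what the model receives without calling the API, which is handy while experimenting with the instructions. A minimal sketch; `build_messages` and `SYSTEM_INSTRUCTIONS` are hypothetical names, and the instruction text is adapted from the system prompt in `chatbot_response`.

```python
from typing import Dict, List

# Instructions adapted from the system prompt in chatbot_response
SYSTEM_INSTRUCTIONS = (
    "You are a chatbot on Codesphere's website. "
    "You need to compare Codesphere with alternatives in 3 short bullet points. "
    "With the following context:\n"
)


def build_messages(context: str, question: str) -> List[Dict[str, str]]:
    """Assemble the conversation list for the chat completion request.

    The system message carries the instructions plus the context text;
    the user's question follows as a separate message with role "user".
    """
    return [
        {"role": "system", "content": SYSTEM_INSTRUCTIONS + context},
        {"role": "user", "content": question},
    ]
```

Printing `build_messages(onepager_developer, "How is Codesphere different from Vercel?")` shows the full prompt, which makes it easier to see why the model repeats the context so literally.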

Processing the JSON response:

If the model returns `stop` as the `finish_reason`, it finished its answer normally and we can extract the message. Any other value, for example `length` when the answer was cut off at the token limit, is treated as an error, and the function returns "An error occurred." instead. Because the answer is displayed as HTML on the webpage, we use the `replace` function to turn every `\n` into `<br>` so that line breaks are shown correctly.
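Since the answer ends up in the page as HTML, any `<`, `>` or `&` in the model's output would be interpreted as markup. A minimal sketch of a safer variant that escapes the text before inserting the `<br>` tags; it assumes the front end inserts the answer as HTML and that the response is available as a plain dict with the same `choices` / `finish_reason` / `message` shape. The name `extract_answer` is hypothetical.

```python
import html


def extract_answer(response: dict) -> str:
    """Turn a chat completion response (as a dict) into HTML for the chat window."""
    choice = response["choices"][0]
    # "stop" means the model finished its answer; anything else
    # (e.g. "length" when the token limit was reached) is treated as an error.
    if choice.get("finish_reason") != "stop":
        return "An error occurred."
    text = choice["message"]["content"]
    # Escape <, >, &, and quotes first so model output cannot inject markup,
    # then turn newlines into <br> line breaks.
    return html.escape(text).replace("\n", "<br>")
```

The order matters: escaping after the replacement would turn the `<br>` tags themselves into visible text.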

Improving the responses:

The answers from OpenAI are still relatively generic. To improve them, we added a text file with context, onepager_developer.txt, to the repository. ChatGPT, or rather our chatbot, uses the context in this file quite literally. We had to try a few versions to get somewhat acceptable results, and we believe there is still a lot of room for improvement here. The better our context, the better the results that our chatbot friend presents as facts.
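Because the whole context file is sent with every request, it counts against the model's context window and adds to the cost of each call. While iterating on versions of onepager_developer.txt, a cheap safeguard is to check its size when the app starts. A minimal sketch: the 8,000-character budget, `MAX_CONTEXT_CHARS`, `check_context` and `load_context` are hypothetical choices, and characters are only a rough stand-in for tokens.

```python
from pathlib import Path

# Hypothetical budget in characters, a rough proxy rather than a token count
MAX_CONTEXT_CHARS = 8000


def check_context(text: str, max_chars: int = MAX_CONTEXT_CHARS) -> str:
    """Trim surrounding whitespace and fail loudly if the context is too long."""
    text = text.strip()
    if len(text) > max_chars:
        raise ValueError(f"context has {len(text)} characters; budget is {max_chars}")
    return text


def load_context(path: str) -> str:
    """Read the context file once at startup and validate its size."""
    return check_context(Path(path).read_text(encoding="utf-8"))
```

Failing at startup rather than at request time means an oversized context shows up immediately after deployment, not as a failed answer on the landing page.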

The UI design would also need to be improved before actually showing this on a landing page, but that's a topic for another post I'd say.

Conclusion:

Creating a chatbot is not as difficult as one might think, especially when using pre-trained models. Although these models may lack precise knowledge and have a training cut-off date, they can still give good answers when they work within the information they are given.

Happy coding!