Self-host AI interface builder OpenUI on Codesphere

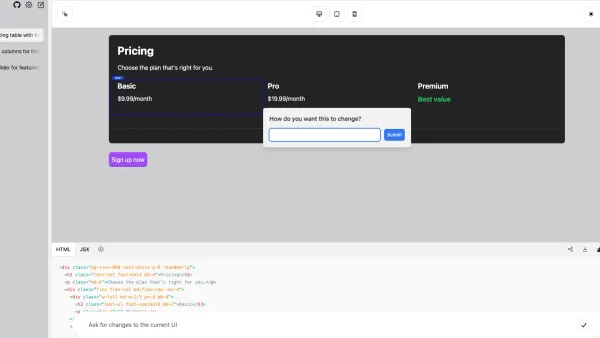

Self-host an AI that builds UI elements and lets you interactively edit them with natural language prompts? Sign us up!

Table of Contents

- Step 1: Create workspace

- Step 2: Install Ollama

- Step 3: Clone OpenUI Repository

- Step 4: Install required Python version and configure virtual environment env variables

- Step 5: Install dependencies for OpenUI

- Step 6: Adjust port and host

- Step 7: Download model & run Ollama server

- Step 8: Run the UI builder and enjoy!

AI tools that help with every part of software development have been popping up left and right. The promise of replacing coding tasks that are both mundane and come with a learning curve is very appealing.

With OpenUI you can create frontends in the most common formats, e.g. HTML, React, Svelte, Vue or web components, with simple natural language prompts. You then refine the generated draft by adding comments to elements, just like you would when collaborating with a design team in Figma. And the best part? It works both with managed AI endpoints from OpenAI and with self-hosted models available via Ollama.

Step 1: Create workspace

If you haven't created an account yet, navigate to codesphere.com and sign up. Next, create a workspace. If you plan on using a self-hosted model via Ollama, it needs to be equipped with one of our GPU plans. Otherwise a smaller plan should be sufficient.

Step 2: Install Ollama

If you want to use OpenAI instead of a self-hosted model, you can skip this step.

We will follow a slightly altered version of the official installation guide, because we need to install Ollama in our /home/user/app directory. Open a terminal and type:

sudo curl -L https://ollama.com/download/ollama-linux-amd64 -o /home/user/app/ollama

Then provide execution rights to the downloaded file via:

sudo chmod +x /home/user/app/ollama

Step 3: Clone OpenUI Repository

Next, get the source code for the UI builder.

git clone https://github.com/wandb/openui.git

This will create a new folder in your app directory with the frontend and backend code for the UI builder.
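If you want to confirm the clone landed where the later steps expect it, a small check like the following can help. This is only a sketch: the helper name `missing_openui_dirs` is made up for illustration, and it assumes the repository was cloned into a folder called `openui` containing `frontend` and `backend`, as described above.

```python
from pathlib import Path


def missing_openui_dirs(app_dir):
    """Return the expected OpenUI subfolders that are absent under app_dir.

    Assumes the clone created <app_dir>/openui with frontend/ and backend/.
    """
    repo = Path(app_dir) / "openui"
    expected = ["frontend", "backend"]
    return [name for name in expected if not (repo / name).is_dir()]
```

Calling `missing_openui_dirs("/home/user/app")` should return an empty list once the clone has finished.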

Step 4: Install required Python version and configure virtual environment env variables

Codesphere pre-installs Python, but this project needs a newer version. First we need to configure pyenv, which makes sure the installed Python version persists across workspace restarts.

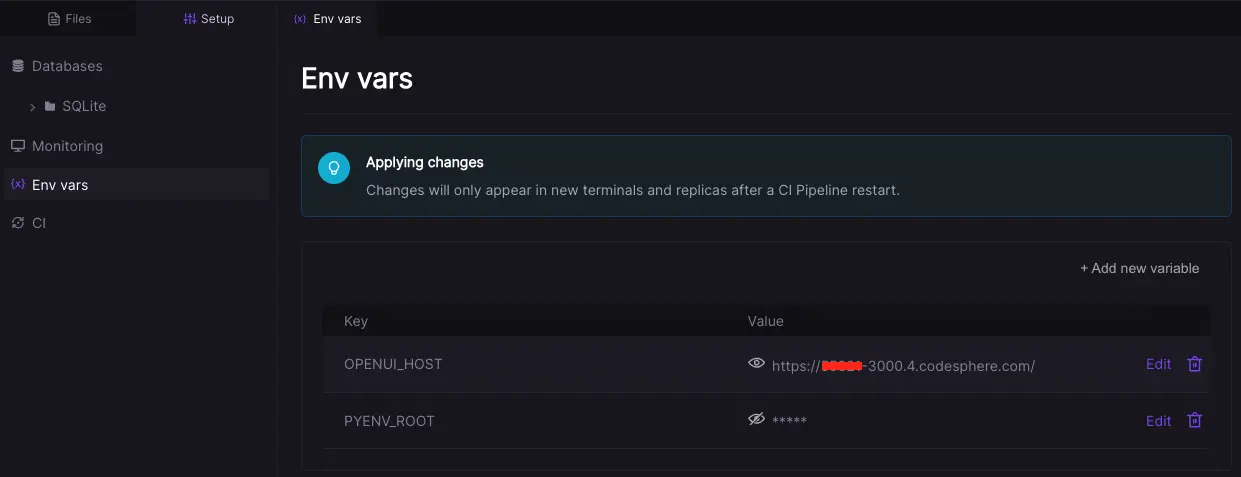

- Navigate to setup/env vars and set `PYENV_ROOT` to `/home/user/app/.pyenv`

- Grab your workspace dev domain via `echo $WORKSPACE_DEV_DOMAIN` and copy it to the clipboard

- Set `OPENUI_HOST` to the value you just copied

- Set `OPENAI_API_KEY` to `xxx` (for local Ollama models) or to your actual OpenAI API key

- Set `OPENUI_ENVIRONMENT` to `local` (please note that for production use you'll want to set this to `production` and set up the GitHub SSO app for session handling)

- Open a new terminal (existing terminals don't get updated env vars)

- Type `pipenv install --python 3.10.0` and confirm with `Y` - this will take a few minutes

- Activate with `pipenv shell`

Verify that your Python version is 3.10 via python -V
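Since a stale terminal is an easy way to end up with missing variables, it can be worth double-checking the setup from inside the pipenv shell. The sketch below is one way to do that. The helper names `missing_vars` and `python_is_310` are hypothetical, and the variable list simply mirrors the four env vars set in this step.

```python
import sys

# The four variables configured in this step.
REQUIRED_VARS = ["PYENV_ROOT", "OPENUI_HOST", "OPENAI_API_KEY", "OPENUI_ENVIRONMENT"]


def missing_vars(env):
    """Return the required variable names that are unset or empty in env."""
    return [name for name in REQUIRED_VARS if not env.get(name)]


def python_is_310(version_info=sys.version_info):
    """True when the interpreter is Python 3.10.x, the version installed above."""
    return tuple(version_info[:2]) == (3, 10)
```

To use it, import `os` and call `missing_vars(os.environ)`. An empty list together with `python_is_310()` returning `True` means the terminal picked up everything.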

Step 5: Install dependencies for OpenUI

First let's build the frontend artefacts. In your terminal navigate into the frontend directory with:

cd openui/frontend

Then run the installation and build:

npm install

npm run build -- --mode hosted

Now navigate to the backend directory (assuming you're still in the frontend directory) with:

cd ../backend

Now install the Python dependencies via:

pip install .

Step 6: Adjust port and host

Currently OpenUI does not support changing the port via a command line flag or similar, so we will need to make a small change to the source code.

Open the file /openui/backend/openui/__main__.py and change line 55 to:

host="0.0.0.0",

and line 57 to:

port=3000,

Now save the changes with command+s.
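The line numbers above refer to the version of the repository we cloned, and they may shift if the upstream file changes. Before and after editing, it can help to print the lines in question. The helper `show_lines` below is a hypothetical convenience for that, not part of OpenUI.

```python
from pathlib import Path


def show_lines(path, line_numbers):
    """Return {line_number: stripped text} for the requested 1-indexed lines.

    Line numbers beyond the end of the file are silently skipped.
    """
    lines = Path(path).read_text(encoding="utf-8").splitlines()
    return {n: lines[n - 1].strip() for n in line_numbers if 1 <= n <= len(lines)}
```

For example, `show_lines("/home/user/app/openui/backend/openui/__main__.py", [55, 57])` should show the `host=` and `port=` lines after the edit. If it shows something else, look for those two arguments nearby in the file instead.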

Step 7: Download model & run Ollama server

Navigate to the root directory via:

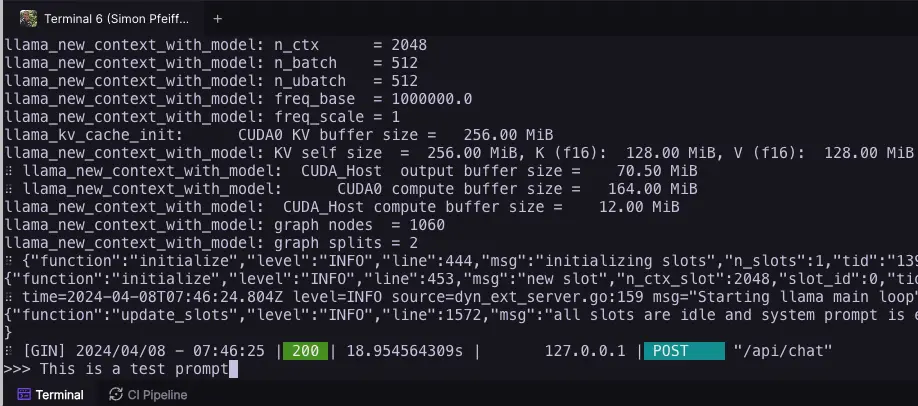

cd /home/user/app

And launch the Ollama server via:

./ollama serve &

./ollama run llava

This will download the model (takes a few seconds) and start a prompt shell. To verify it is actually working, type a prompt and see if it produces output. If it works, exit the prompt context via Control + D (Mac) or Ctrl + C on Windows.
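Besides typing a prompt, you can also ask the running server which models it has. The sketch below assumes Ollama is listening on its default address `http://localhost:11434` and uses its `/api/tags` endpoint, which lists locally available models. The function names are made up for this example.

```python
import json
import urllib.error
import urllib.request

# Assumption: Ollama runs with its default host and port.
OLLAMA_URL = "http://localhost:11434"


def model_names(tags_payload):
    """Extract model names from the JSON text returned by /api/tags."""
    data = json.loads(tags_payload)
    return [m["name"] for m in (data.get("models") or [])]


def has_model(tags_payload, wanted):
    """True if a model called `wanted` (with any tag) appears in the payload."""
    return any(name.split(":")[0] == wanted for name in model_names(tags_payload))


def fetch_tags(base_url=OLLAMA_URL, timeout=5):
    """Return the raw /api/tags response text, or None if the server is unreachable."""
    try:
        with urllib.request.urlopen(base_url + "/api/tags", timeout=timeout) as resp:
            return resp.read().decode("utf-8")
    except (urllib.error.URLError, OSError):
        return None
```

If `fetch_tags()` returns `None`, the server is probably not running in this workspace. Otherwise `has_model(fetch_tags(), "llava")` tells you whether the download went through.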

Step 8: Run the UI builder and enjoy!

Make sure you're still inside your pipenv shell, and then, in the same terminal (this is important), type:

cd /home/user/app/openui/backend

python -m openui
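If the page does not load, a quick first check is whether anything is listening on the port we configured in Step 6. The following is a minimal sketch using only the standard library. `port_is_open` is a hypothetical helper name, and it only tests that a TCP connection succeeds, not that OpenUI itself is healthy.

```python
import socket


def port_is_open(host, port, timeout=2.0):
    """True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False
```

Run `port_is_open("127.0.0.1", 3000)` from a second terminal in the workspace while the backend is running. `False` suggests the server did not start or is bound to a different port.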