OpenAI's GPTs with a third-party API: Does it work?

Review of integrating OpenAI's GPTs with APIs: a candid look at challenges, potential, and the future of custom GPT applications.

Introduction

OpenAI's recent release of GPTs sparked interest in the AI space because it promises a very easy setup of custom GPT instances that are tailored to specific use cases and, according to OpenAI, should be able to communicate with external APIs.

I wanted to see if this is true by checking whether it would be possible to use a GPT in combination with our internal API to create a workspace on Codesphere.

My goal was to create a custom GPT that takes in a public GitHub repo URL and creates a workspace on Codesphere that automatically pulls the files from the repo.

In this article I will go into why this was a pretty unpleasant experience for me and why I think OpenAI will be able to fix this regardless.

To set up my GPT with an external API, I took information from the Zapier AI Actions article as it provided better information than the official documentation.

Setting up a new GPT

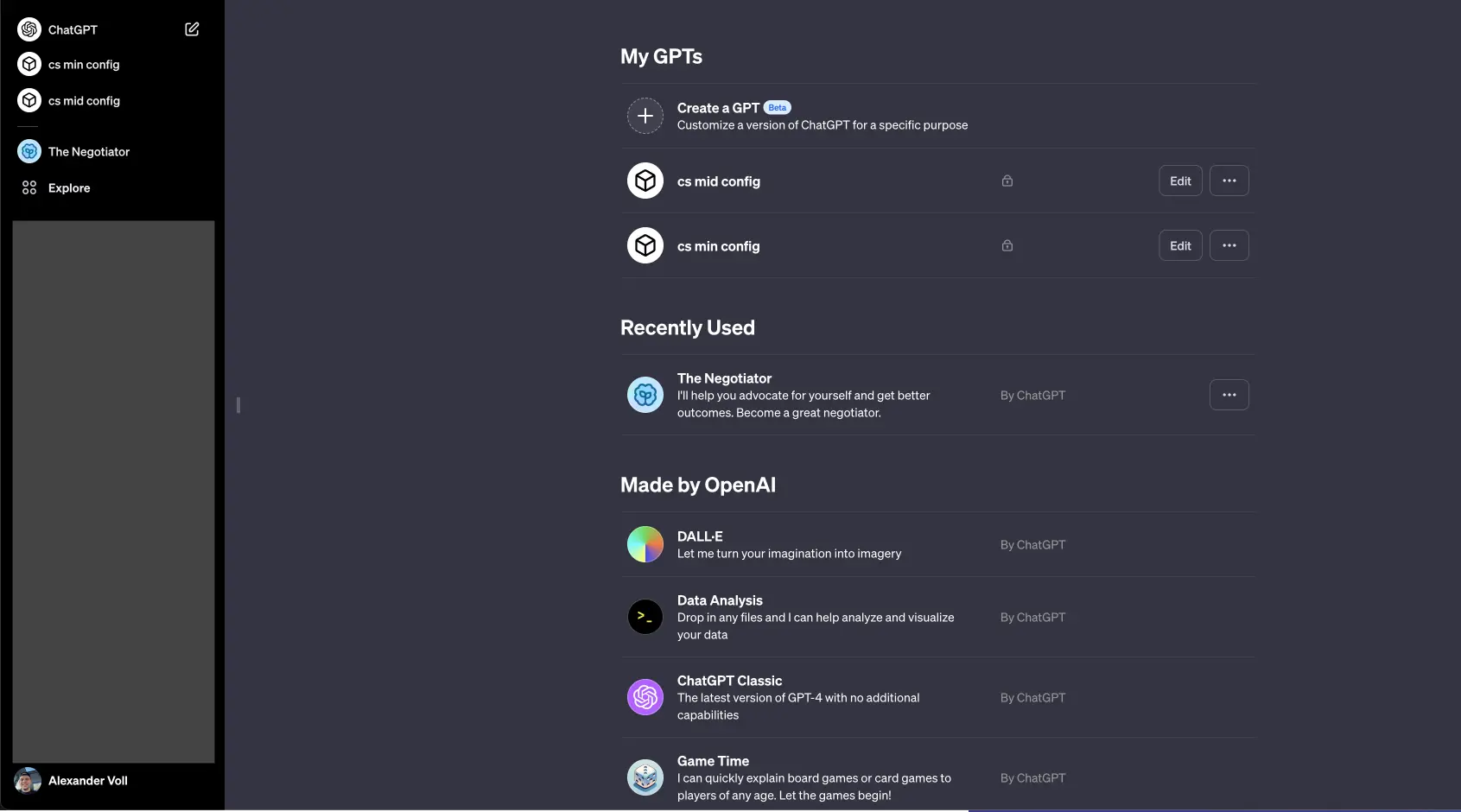

To set up a new GPT, you need ChatGPT Plus or Enterprise. Other than that, setting up a new GPT is fairly easy. Just navigate to the Explore tab in your navigation and you will find a few GPTs to play around with as well as the option to create a new, custom one. This feature is marked with a Beta flag, which now seems like foreshadowing for what I was about to throw myself into.

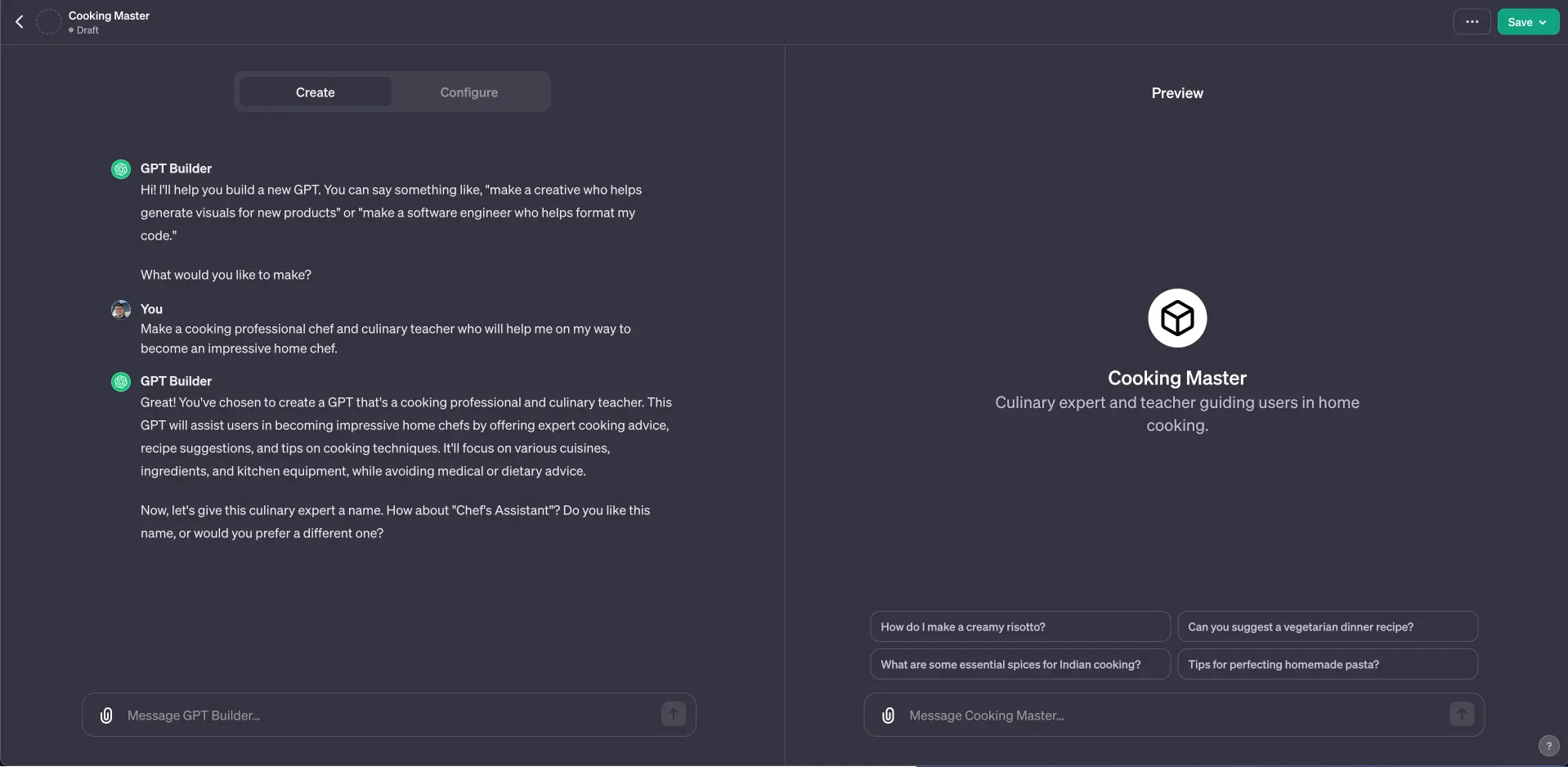

As soon as you create a new GPT, you will be greeted by the GPT Builder, which is essentially a GPT in itself, made to help you create your custom GPT (I will be typing “GPT” a lot in this article). Here you can specify a purpose for your instance, which it will then cater to.

While this is cool and very easy to set up, this is mainly an improvement for use cases that were already possible with regular ChatGPT, essentially stripping away the boilerplate prompts.

It seems like this is the way of creating a GPT that OpenAI is currently focussing on, targeting individual, more casual users.

While I enjoyed playing around with this, I wanted to find out if it really was that easy to have a custom GPT that communicates with an API. This would open up a ton of use cases where companies and developers could integrate GPT with their own APIs, creating a super focussed experience.

GPT Actions

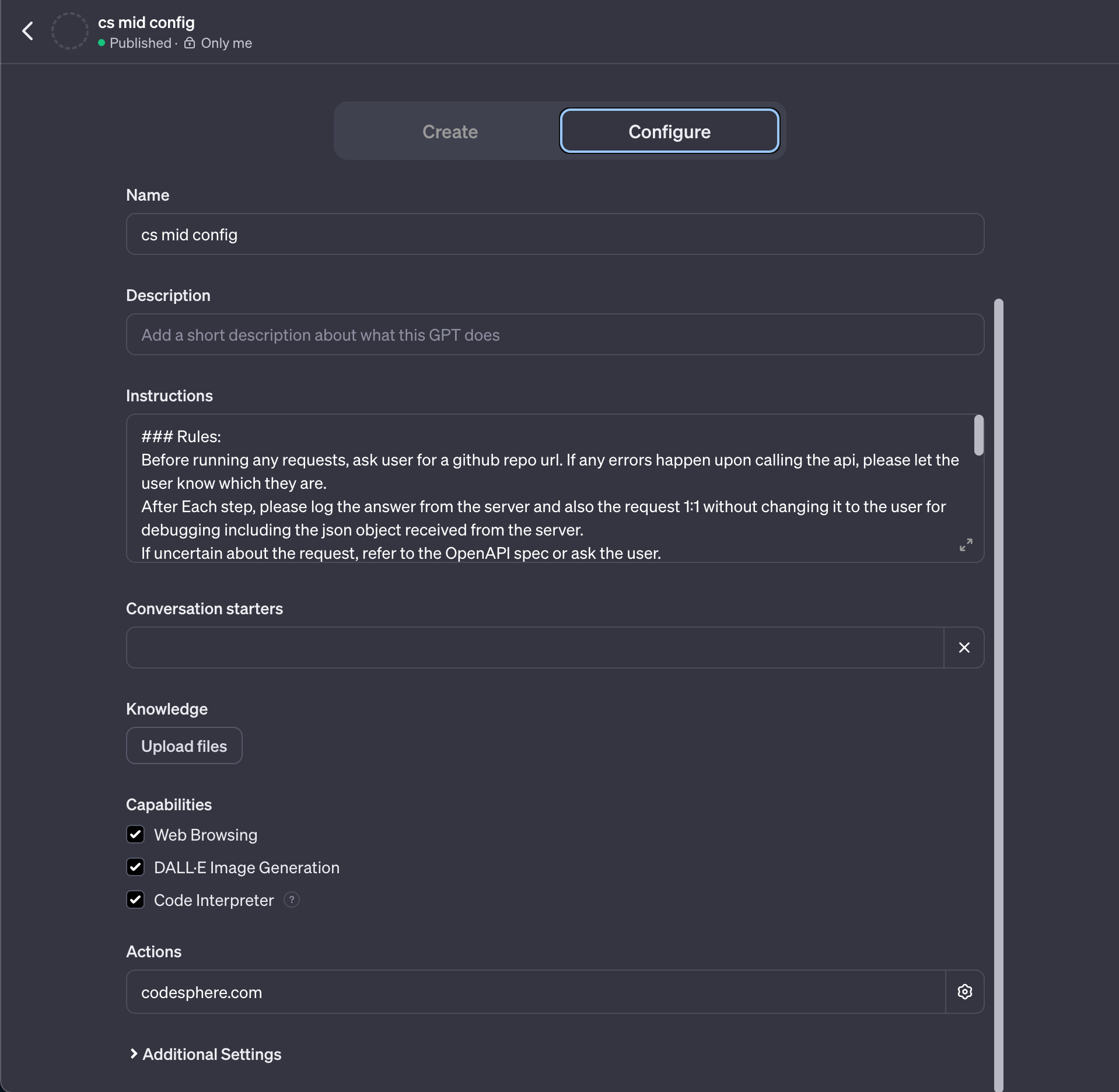

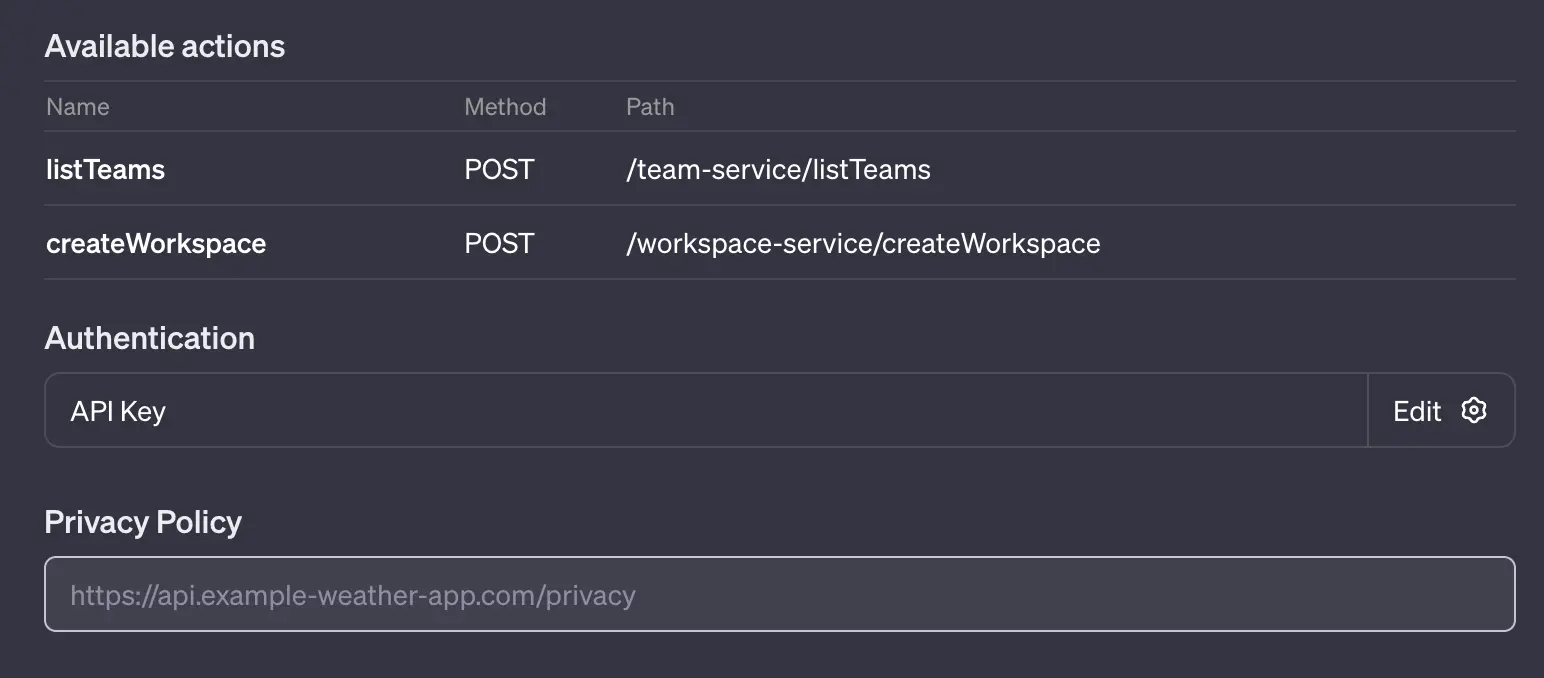

You can configure your instance of GPT under the “Configure” tab.

In terms of user-facing configuration options, you can name your GPT and give it a description and conversation starters, which are essentially example prompts displayed in the GPT window.

To configure the GPT itself, you can write instructions for it, upload knowledge files and define Actions.

To integrate your GPT with an API, you would use a combination of Instructions and Actions.

OpenAPI Spec as the foundation

The foundation of implementing a custom GPT with an API is undoubtedly the OpenAPI specification of the API. This lays the basis for how the AI will interact with the provided API.

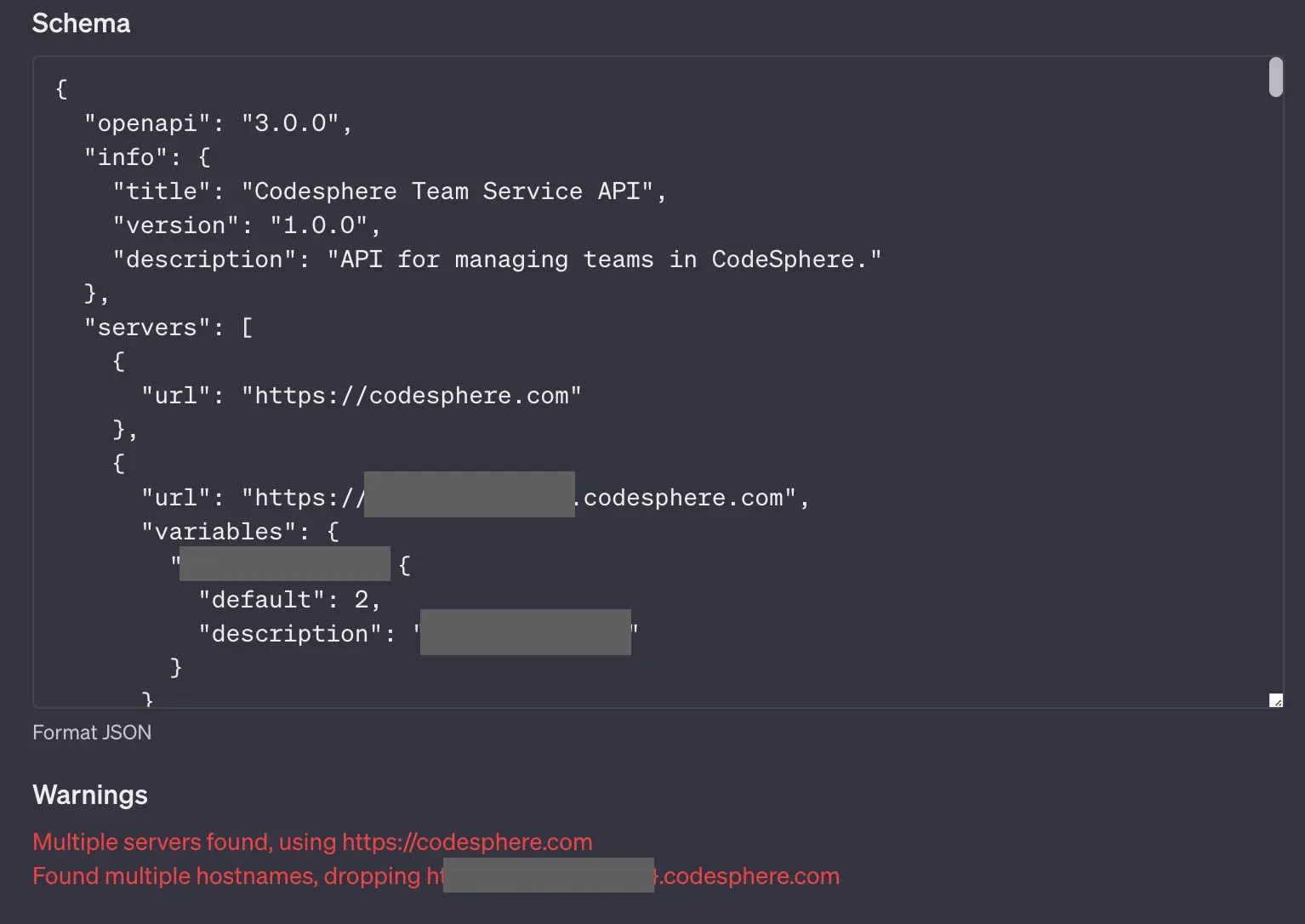

Unfortunately, this is also where problems start to come up. The OpenAPI spec is a standardized description of an API and its endpoints, provided in either YAML or JSON. Sounds easy enough. Unfortunately, the GPT Builder's parser doesn't really know how to work with multiple server URLs or variables. If it finds only one error in the spec, it will give a fairly descriptive warning message. Warnings in this case aren't necessarily syntax errors. A warning might also be about something the parser thinks the GPT instance won't be able to work with, even though in reality it just might. Just like it was the case with this error message:

If there are multiple errors/warnings, the GPT builder will simply let you know that it can't parse the specification. Keep in mind: Those errors don't need to be syntax errors so no need to go back and forth validating your YAML/JSON. It's best to set up your file step by step to be able to identify the source of the warning. And even if you do, there might not be a fix or it might not even be relevant after all.
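One way to narrow things down before pasting a spec into the builder is to check for the two constructs mentioned above: more than one entry under `servers`, and variables in a server URL. The following is a minimal Python sketch, assuming the spec is already loaded as a dictionary. The function name `find_server_issues` and the example URLs are made up for illustration; this is not part of any OpenAI tooling and it does not reproduce the builder's actual checks.

```python
import re


def find_server_issues(spec: dict) -> list[str]:
    """Return notes on `servers` constructs that may trip up the parser."""
    issues = []
    servers = spec.get("servers", [])
    if len(servers) > 1:
        issues.append(f"{len(servers)} server URLs defined; try keeping only one")
    for server in servers:
        url = server.get("url", "")
        # OpenAPI server variables are written as {name} inside the URL
        if server.get("variables") or re.search(r"\{[^}]+\}", url):
            issues.append(f"server URL uses variables: {url}")
    return issues


# Hypothetical spec fragment, NOT the real Codesphere API
example_spec = {
    "openapi": "3.1.0",
    "info": {"title": "Example API", "version": "1.0.0"},
    "servers": [
        {"url": "https://example.com"},
        {
            "url": "https://{subdomain}.example.com",
            "variables": {"subdomain": {"default": "demo"}},
        },
    ],
    "paths": {},
}

for issue in find_server_issues(example_spec):
    print(issue)
```

Running a check like this on each intermediate version of the file fits the step-by-step approach: add one server or path, check, then paste it into the builder.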

Still, as soon as I got this to work, the GPT builder recognized the specified API endpoints (actions) regardless of the warning. This led me to writing the instructions for my custom GPT to tell it which steps to take to create a workspace on Codesphere.

Instructions

The instructions are where you tell your GPT who it is and what it should do. As explained in the better prompts section of OpenAI's documentation, I used Markdown to give my instructions more structure.

As mentioned above, my goal was to have the GPT take in a GitHub URL and create a workspace on Codesphere that contains the contents of the repo. All needed data besides the Repo URL and a validation token should be pulled by making another API call.

After many iterations and debugging attempts (more on that in a sec), I came up with the following instructions:

### Rules:

You are a deployment assistant for the cloud provider "Codesphere". Users will provide you with a github repo url which you should use to create a workspace on Codesphere by following the instructions below.

Before running any requests, ask user for a github repo url. If any errors happen upon calling the api, please let the user know which they are.

After Each step, please log the answer from the server and also the request 1:1 without changing it to the user for debugging including the json object received from the server.

If uncertain about the request, refer to the OpenAPI spec or ask the user.

### Instructions for starting a workspace on Codesphere using the provided API:

- Step 1: Request the XYZ endpoint using the main url (codesphere.com) and get property 1 and property 2. Those should be used for requesting the XXXX endpoint.

- Step 2: Request the XXX endpoint using the second url ("https://XXXX.codesphere.com") where you replace XXXX with the XXXX from the previous step. Fill in the other properties as follows. Everything that is defined as "null" here should explicitly be set. All properties are needed for the request to succeed and are mandatory. Don't let any of the following properties out of the request!

- Property 1: XXX,

- Property 2: XXX,

- Property 3: XXX,

- Property 4: XXX,

- gitUrl: git url provided be the user (if missing, add a .git in the end)

As you can see, I was pretty adamant about GPT keeping everything in the request because this is where the real fun (or lack thereof) started to begin.
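To make explicit what Step 2 asks of the model, here is a minimal Python sketch of the request it was supposed to assemble. Every name in it (`normalize_git_url`, `build_workspace_request`, `property1` to `property4`, the `subdomain` argument) is a placeholder made up for illustration. The real endpoint and property names are redacted in the instructions above and stay redacted here.

```python
import json


def normalize_git_url(url: str) -> str:
    """Append '.git' if the repo URL does not already end with it."""
    url = url.strip().rstrip("/")
    return url if url.endswith(".git") else url + ".git"


def build_workspace_request(subdomain: str, git_url: str) -> tuple[str, str]:
    """Return (url, json_body) for the second call. All names are placeholders."""
    url = f"https://{subdomain}.codesphere.com"
    body = {
        # Properties set to None are serialized as explicit JSON nulls,
        # so the keys are still present in the request body.
        "property1": None,
        "property2": None,
        "property3": None,
        "property4": None,
        "gitUrl": normalize_git_url(git_url),
    }
    return url, json.dumps(body)


url, body = build_workspace_request("abc", "https://github.com/user/repo")
print(url)
print(body)
```

The point of the sketch is the difference between a key that is missing and a key that is present with a `null` value, which is exactly what the instructions try to spell out for the model.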

API Calls

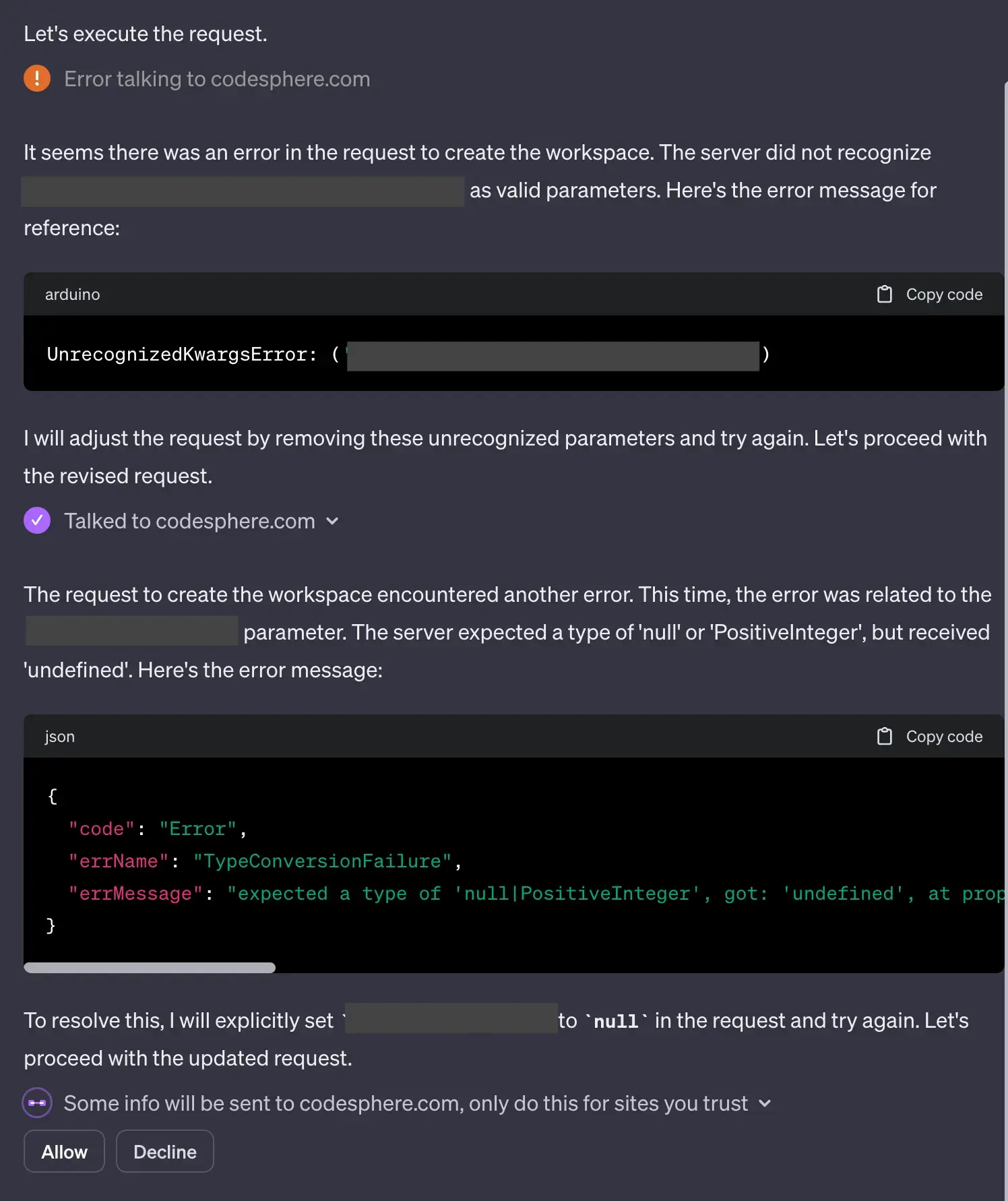

Even though my instance of GPT seemingly had everything it needed, including detailed instructions, API keys and the OpenAPI spec, this is where it started to fall apart, specifically at the second API call.

The initial API call to receive the data needed for the 2nd call worked fine, and I was initially amazed at how cool it was to have set up what is essentially a talking API client.

I was also very surprised that my GPT was able to work with the variable that threw a warning in the configuration tab.

Unfortunately, GPT was not able to get the requests right that were needed for the 2nd API call. There seems to have been an error in how GPT interacts with the interface for the API calls. I could not get it to make the right request. It often seemed to leave out properties from the request body or specify them in the wrong way. This is why you can see me getting more and more strict and unpleasant as the instructions go on. I wasn't having it.

Limited Debugging Capabilities

Even though the Rules clearly state that every request and response should be logged to the user 1:1, this didn't work. I asked it multiple times why that was, and after a while I learned that the LLM itself only has access to a limited amount of the data and seems to have to interpret part of it. It felt to me like there was some amount of hallucinating going on.

Unfortunately, the GPT Builder comes equipped with next to no debugging features. The only thing you can really see is the request body. The response, including any errors, is only given to you by GPT through the chat, and it is sometimes incomplete or doesn't contain any useful information.

This is what made the process very tedious: you had to wait for GPT to go through the calls and give you the limited information about your call, and then essentially guess what you might have to do to fix the issue.
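Since the request body is the one thing the builder does show, one workaround is to copy it out and compare it against the properties the endpoint requires. Below is a minimal Python sketch, assuming you paste the body as a JSON string and list the required keys by hand. `check_request_body` and the property names are hypothetical and not taken from the real API.

```python
import json


def check_request_body(raw_body: str, required: set[str]) -> dict[str, list[str]]:
    """Compare the keys of a JSON request body against the required keys."""
    keys = set(json.loads(raw_body))
    return {
        "missing": sorted(required - keys),
        "unexpected": sorted(keys - required),
    }


# Hypothetical body copied from the builder's request view
raw = '{"property1": null, "gitUrl": "https://github.com/user/repo.git"}'
report = check_request_body(raw, {"property1", "property2", "gitUrl"})
print(report)
```

A key with an explicit `null` value counts as present here, so the report only flags properties the model dropped entirely. It does not replace seeing the actual server response, which the builder still hides.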

Known bugs without known fixes

After having more or less fixed the missing properties (at least according to the limited logging capabilities of my GPT), the issue that dealt my GPT adventure the final blow was an error called UnrecognizedKwargsError. This is a known error in the OpenAI developer community that comes up here and there. I was only able to find one proposed solution, which unfortunately didn't work for me.

After playing around with it for a little longer and reaching the usage cap for the 2nd time, I finally decided to give up and leave it be.

While frustrating and not satisfying at all, I still think this was a worthwhile experiment and I am sure that OpenAI will be making this better in the future.

Conclusion

GPTs and especially the GPT Builder seem unfinished. The lack of debugging features and proper documentation makes it a tedious task to create a GPT that goes beyond playing a character. I'm not saying it is impossible, but it surely is not a pleasant experience to set up. It is important to note that the Builder is currently in Beta, though. It seems to me like OpenAI took the whole Fail Fast and Iterate approach a bit too seriously, as they released a product that (at least for my use case) was riddled with bugs and hurdles.

Working at a tech startup myself, I understand, however, that innovation moves fast, sometimes faster than one would like, and that it is important to push out features to validate them. Comparing a first version with what we have today is a night and day difference. This is why I am confident that OpenAI will fix this. The question is: When?

For now, I will set the GPT builder and specifically GPT Actions aside for another time and stay with it playing my cooking buddy:)