Why virtualization software stacks are no longer mandatory

Why virtualization software like VMware is no longer a hard requirement for many companies.

Executive Summary

- Broadcom's takeover of VMware eliminates many products and increases costs by up to 6x

- Virtualization is only one of multiple ways to split physical hardware into usable chunks

- Containerization used to be challenging at scale but new technologies offer convenient solutions

Broadcom acquisition puts many VMware customers in a pickle

VMware was acquired by Broadcom last year and has since announced the deprecation of 56 of its products, including many of its perpetual-license products. To put this in perspective, the entirety of Google’s application software “graveyard” contains only 59 products - VMware and its new owner Broadcom are about to sunset almost the same number of products in a single run. The motivation is to push more customers toward the cloud offerings and increase subscription-based revenue. For some customers this can lead to price increases of several hundred percent.

Virtualization is a deeply embedded technology - it sits at the very core of many enterprise software systems. Even on typical hyperscaler clouds you are still running on virtual machines behind the scenes; the only difference is that the hyperscaler runs the virtualization layer (called the hypervisor) for you. VMware plays a key role here: it is one of the leading providers of hypervisor technology and is especially popular among large and medium-sized companies.

In theory, the gains from virtualization (running multiple services on each piece of hardware) can be achieved with any hypervisor provider. There are quite a few open source alternatives that solve the same underlying problems (XCP-ng, Proxmox, OpenVZ, etc.). The switch itself is usually not the main challenge (unless you are migrating thousands of VMs); the real effort begins afterwards. The details differ between tools, and teams have to learn how to work with the new system. Troubleshooting issues and understanding the intricacies is going to be costly for companies. Network handling, hardware support, drivers and maintenance cycles will all be different. It is also hard to predict how stable a new system will be without extensive tests. On top of that, all VMware certifications that teams acquired are now obsolete. Overall, this is not a pleasant outlook for anyone who has to migrate away from VMware because they are unwilling to follow it into the cloud.

On top of all this, the change is unexpected: these perpetual-license systems were not supposed to reach end of life this soon, or at all. The forced timeline introduces security risks and strains already allocated budgets. Keeping existing VMware systems running is often not an option either, as support will run out and licenses will not be renewed. Moving to VMware's cloud offerings, on the other hand, comes with its own set of challenges: some products don’t have a cloud equivalent or are not fully compatible, and in almost all cases the price will go up significantly.

Self-hosted open source software might not be a viable alternative even if all technical challenges can be solved. Managed software vendors like VMware offer SLAs and support, which are important for ensuring compliance and for spreading downtime risk by relying on experienced external support. Companies use this to contract out some of the liabilities associated with running these clusters, and they are willing to pay hefty sums for this peace of mind. They want someone on the other end of the support line if they encounter a problem they cannot solve themselves. Some verticals, such as government contractors, are even obligated to show in their audits that this kind of support is in place.

But why is virtualization such a central component, and what are potential alternatives and solutions for businesses? Let’s take a look at how virtualization fits into the bigger picture of hardware and software orchestration.

From bare-metal to the application layer - virtualization, containerization and more

Generally speaking, whether your software runs on-premise or in the cloud, the hardware servers underneath look rather similar. The main difference is where these servers are physically located and how distribution is handled.

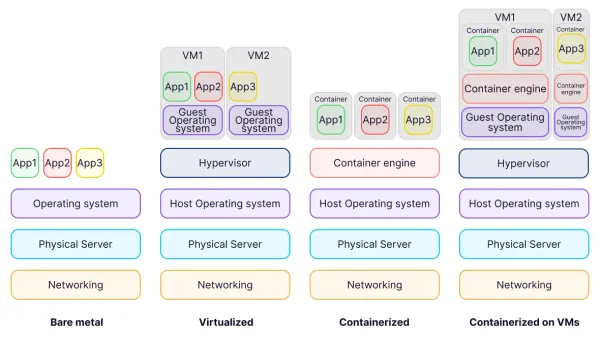

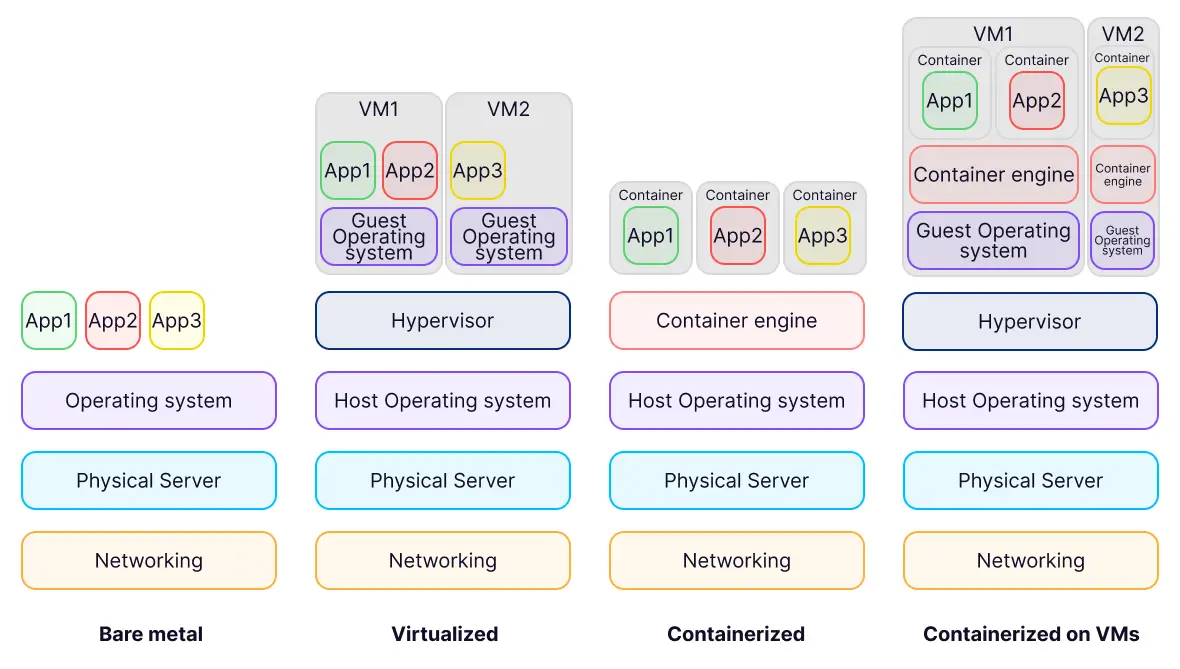

To understand the challenges of the Broadcom VMware acquisition, it is important to understand the steps needed to turn physical hardware into a usable or even cloud-ready entity that can be distributed and used for your applications. The lowest layer is called bare metal - it is your physical hardware server or rack of servers. To run software on it, you need at least a networking layer and an operating system per machine. If you plan on running a single application per physical server, you are done at this point. This used to be the standard in the early days of enterprise IT, but having one physical server per application is rather inefficient and expensive. Running multiple applications on a single server and operating system, on the other hand, introduces the risk of uncontrollable resource conflicts and incompatibilities between applications.

Virtualization allows you to overcome this challenge. The concept dates back to the mainframes of the 1960s, and it has been the de facto standard in enterprise data centers since the 2000s. Using a hypervisor, you can take a single physical machine and run multiple virtual machines (VMs) on top of it, each with its own operating system. These virtual machines are well isolated, which limits the risk of conflicts between them. The hypervisor introduces an abstraction layer between the host and the VMs' operating systems, and it allocates the available resources (CPU, memory and storage) between VMs. VMs are not only used by enterprises in on-premise data centers; they are also what powers most of the modern cloud. When you get computing instances from, say, AWS, you will most likely get access to a specific VM rather than a physical server.
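To make the allocation idea more concrete, here is a minimal Python sketch of a host that hands out fixed slices of CPU, memory and storage to VMs and refuses any request that no longer fits. The `Resources` and `Host` classes, their field names and the numbers are all hypothetical and chosen for illustration only. Real hypervisors are far more sophisticated (they can overcommit resources and schedule them dynamically, for example), so treat this as a toy model, not a description of how VMware or any other product works.

```python
from dataclasses import dataclass, field


@dataclass
class Resources:
    """A bundle of the three resource types mentioned in the text."""
    cpu_cores: int
    memory_gb: int
    storage_gb: int

    def fits_within(self, available: "Resources") -> bool:
        # A request fits only if it fits in every dimension.
        return (
            self.cpu_cores <= available.cpu_cores
            and self.memory_gb <= available.memory_gb
            and self.storage_gb <= available.storage_gb
        )


@dataclass
class Host:
    """Hypothetical physical machine that gives each VM a fixed slice."""
    capacity: Resources
    vms: dict = field(default_factory=dict)  # VM name -> Resources

    def free(self) -> Resources:
        # Remaining capacity = total capacity minus all existing slices.
        used = self.vms.values()
        return Resources(
            self.capacity.cpu_cores - sum(v.cpu_cores for v in used),
            self.capacity.memory_gb - sum(v.memory_gb for v in used),
            self.capacity.storage_gb - sum(v.storage_gb for v in used),
        )

    def create_vm(self, name: str, request: Resources) -> bool:
        # Assumption: no overcommitment. Reject duplicates and requests
        # that exceed what is still free on the host.
        if name in self.vms or not request.fits_within(self.free()):
            return False
        self.vms[name] = request
        return True


# Illustrative numbers only.
host = Host(Resources(cpu_cores=32, memory_gb=128, storage_gb=2000))
print(host.create_vm("web", Resources(8, 32, 200)))
print(host.create_vm("db", Resources(16, 64, 1000)))
print(host.create_vm("batch", Resources(16, 64, 500)))  # only 8 cores left
print(host.free())
```

The third request is rejected because only 8 CPU cores remain, which is the isolation guarantee in miniature: one VM cannot take resources that have already been promised to another.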

Starting in 2013 with the introduction of Docker, another approach gained popularity. Containerization (also called OS-level virtualization) also divides individual pieces of hardware into smaller isolated chunks so that applications can run without interfering with each other. Unlike VMs, though, containers do not each need their own operating system. They share the kernel of the host operating system, are managed by a container engine, and only give the applications running inside them the appearance of a separate operating system. This makes them more lightweight and less resource intensive than VMs. It also makes it easier to scale both the size (vertical scaling) and the number (horizontal scaling) of the machines available to the applications.

Containerization and virtualization can be, and often are, combined. With this approach you install a container engine inside a VM. This is often done when building a cluster or data center on top of a cloud subscription. For cloud providers this is easier than providing access to dedicated bare metal machines, as the VMs are not bound to a specific machine and the provider can allocate available resources more easily across customers.

VMware is best known for its hypervisor virtualization stack, which is widely used in enterprise settings, and for the adjacent products that make working with VMs more comfortable (e.g. tools for migrating VMs from one physical host to another).

Now that VMware has announced it will no longer provide installable offline licenses for its hypervisor technology, in favor of its hosted and subscription-based cloud hypervisors, should all affected companies start looking for alternative hypervisor stacks? In short, no!

As the previous chart shows, while it is possible and common to run container engines on VMs, VMs are in no way a requirement. Modern container orchestration with Kubernetes and many derived technologies can be installed directly on bare metal (see column 3 of the chart above). In this scenario the hypervisor level is skipped. One challenge remains, however: not all applications are ready to run in bare-bones containerized environments out of the box. Many companies have started “containerizing” their applications, but these migrations are typically multi-year endeavors and often far from finished. Are those companies then forced to continue working with hypervisors for the foreseeable future? No - luckily that is not the case.

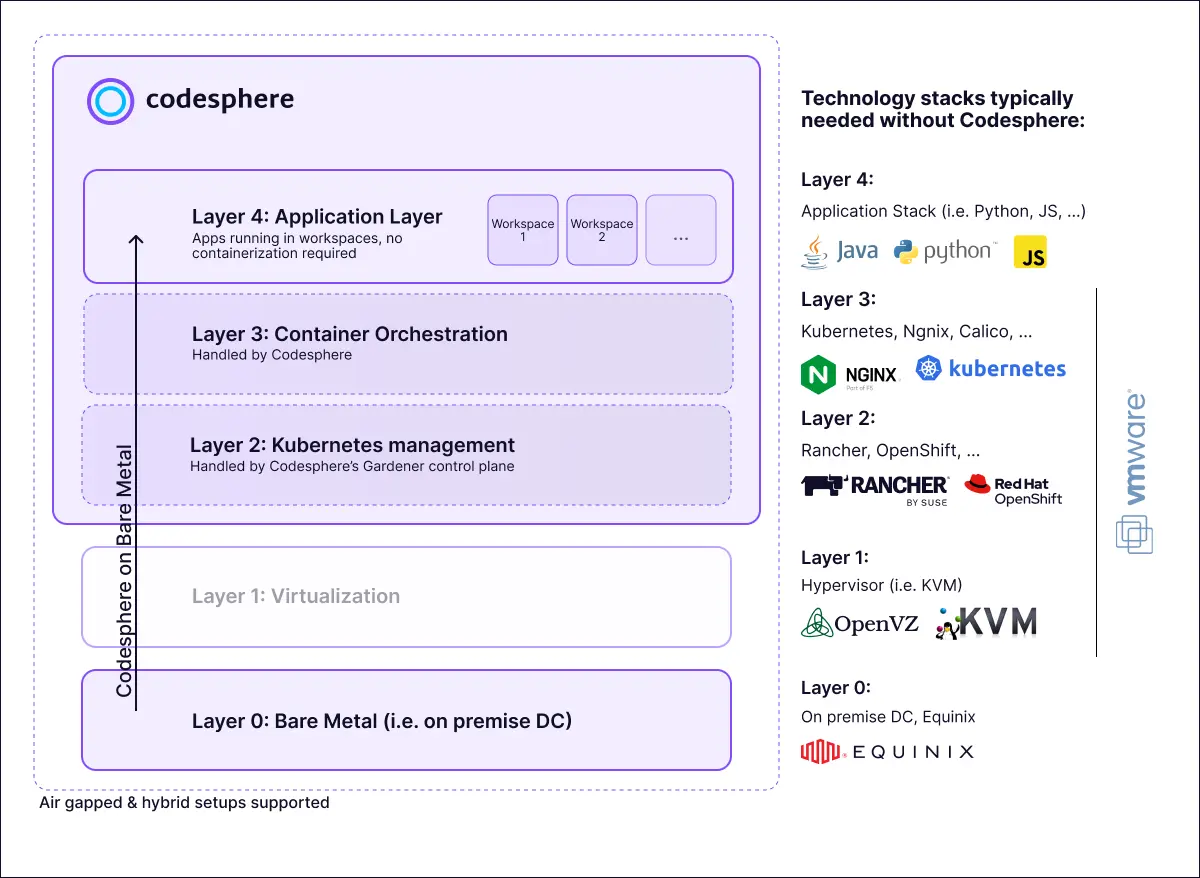

While pure Docker-based solutions require applications to be packaged in a certain way (hence the “containerization” projects), new technologies are on the rise that turn containers into fully usable “machines” that do not require applications to be “dockerized”. Codesphere, for example, provides so-called workspaces - these are technically containers (with all the benefits and more) but behave very much like an actual machine. You can install and run any software directly, just like on a local machine, with no need to package or containerize your applications.

Why containerization used to be challenging

It has been possible to turn containers into usable machine-like environments for a while, and there are many established enterprise software providers that help companies manage Kubernetes at scale (e.g. Rancher by SUSE or OpenShift by Red Hat). Setting these systems up, however, has been far from easy. Unlike VMware, which offered all-in-one solutions, these providers require plugging many different components together and maintaining a highly complex system over time. This requires experienced DevOps or Ops engineers, and projects to set up production-ready clusters take multiple months or even years.

Additionally, the developer experience within such systems is suboptimal. Developers who build the software that ultimately runs on this infrastructure have to keep up with its complexity. When they encounter certain issues, they often need help from more experienced DevOps colleagues for debugging, which slows teams down and creates bottlenecks.

Codesphere’s solution covers the entire software lifecycle, similar to VMware’s solutions, and solves many of the problems that used to be associated with running Kubernetes at enterprise scale. Codesphere’s deployments offer all the advantages of containers (e.g. they are scalable and lightweight) plus additional advantages like our patented cold-start technology, which allows containers to be turned off when unused and reactivated within seconds when needed. This is embedded in an end-to-end frontend that simplifies working with the system to a point where DevOps becomes obsolete. Developers can manage all their hardware requirements from an intuitive UI in a self-service manner.
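The general idea of switching containers off when idle and bringing them back on demand (often called scale-to-zero) can be illustrated with a small Python sketch. This is not Codesphere's patented implementation, whose internals are not described here. It is a generic idle-timeout pattern, and the class name `ScaleToZeroWorkspace`, its methods and the timeout value are hypothetical. Timestamps are passed in explicitly so the behavior is easy to follow.

```python
class ScaleToZeroWorkspace:
    """Toy model of a container that stops when idle and restarts on demand."""

    def __init__(self, idle_timeout_s: float):
        self.idle_timeout_s = idle_timeout_s
        self.running = False
        self.last_request_at = None
        self.cold_starts = 0

    def handle_request(self, now: float) -> str:
        # A request arriving while the container is stopped triggers a cold start.
        if not self.running:
            self.running = True
            self.cold_starts += 1
        self.last_request_at = now
        return "ok"

    def tick(self, now: float) -> None:
        # Called periodically by a (hypothetical) controller: stop the
        # container once it has been idle for at least the timeout.
        if self.running and now - self.last_request_at >= self.idle_timeout_s:
            self.running = False


# Illustrative timeline, in seconds.
ws = ScaleToZeroWorkspace(idle_timeout_s=60.0)
ws.handle_request(now=0.0)    # first request: cold start
ws.tick(now=30.0)             # still within the idle window, keeps running
ws.tick(now=61.0)             # idle for more than 60 s, gets stopped
ws.handle_request(now=100.0)  # next request: second cold start
print(ws.running, ws.cold_starts)
```

In a model like this, the trade-off is between the resources saved while the container is stopped and the delay of each cold start, which is why fast reactivation matters.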

Codesphere offers a cloud-based PaaS model, but larger clients in particular appreciate that Codesphere can also be installed in their own data centers. Installations are possible on bare metal, on virtual machines or on managed Kubernetes, and can run air-gapped, openly connected or in hybrid combinations. We have enterprise clients using this to orchestrate both development and production environments on premise, on (private) cloud subscriptions or in one of our own PaaS data centers, and we are growing rapidly. Please get in touch if you want to learn more.