Generative AI: How Self-Hosting Can Help Your Business

Self-hosting generative AI can make a huge impact on business workflows. Here is how you can get the maximum out of it.

There is a lot of uproar about AI and its implications around the world. We do not believe that AI will be sentient anytime soon. Some of the hype around AI is just that, hype. However, companies need to understand the true effect of AI to stay ahead in the competitive market. Organizations should try to explore the possible use cases of generative AI and the way it can help their businesses.

We are going to discuss what artificial intelligence is and how generative AI has developed to its current state. We will also talk about the ways businesses can integrate self-hosted LLMs in their workflows and how Codesphere can help. So, let’s dive right in.

What is AI?

Artificial Intelligence is a field of computer science, that has gained insane popularity in the last two decades. AI tries to build a machine capable of making human-like intelligent decisions. AI systems are designed to analyze, reason, learn, and adapt. It allows them to perform tasks, such as problem-solving, language understanding, and decision-making.

An AI model is basically a program that can be trained on data and make predictions or decisions based on it. The field of AI encompasses an array of technologies including machine learning, deep learning, natural language processing, and robotics. AI's potential is vast, and it continues to shape the future of technology. AI offers solutions to complex problems and unlocks new horizons in automation and decision support.

What is Generative AI?

Generative AI is a sub-field of artificial intelligence where algorithms are used to generate plausible patterns for things like language or images. The general narrative about generative AI is that it makes machines able to come up with original ideas, like writing stories or making art. For example, it can write stories or generate images without being given specific examples. However, in reality, these machines are basically extremely efficient at recognizing patterns from the data they are trained on and recreating similar patterns. Generative AI is often used for things like making chatbots, translating languages, and creating content.

History of Generative AI

Much like every other field, generative AI has also had gradual and at times abrupt advances. If we try to trace back the history, we can see that it has been around since 1913, when the Markovian chain algorithm was developed. It was a statistical tool to generate new data. Here the input defined how the next sequence of data would look like.

However, the more useful generative AI era began after 2012. It was then we finally had powerful enough computers to train deep-learning models. These models were trained on large data sets. Deep learning models considerably improved many organizational tasks like language translation, image-to-text, and art generation.

In 2014, a game-changing algorithm by Ian Goodfellow was released. The development of the Generative Adversarial Networks enabled the deep learning models to self-evaluate using game theory. It’s said that Generative Adversarial Networks (GANs) gave computers the ability to be creative while generating content.

Innovations in Generative AI

Shortly after the GANs, another technique called transformer networks came. This technique was based on the idea of attention. It gave an “attention score” to different parts of speech. It immensely improved AI models in understanding and generating content. This technique combined with the power of deep learning, gave birth to Large Language Models that we see today.

What are LLMs?

Large Language Models (LLMs) are complex deep learning models that are trained on huge datasets. They have ability to understand and use human language in a really advanced way. They can effectively perform tasks like text generation, language translation, chatbot development, and content summarization. These models are highly adaptable and efficient, which is why they are important in the development of advanced AI-driven solutions for various industries.

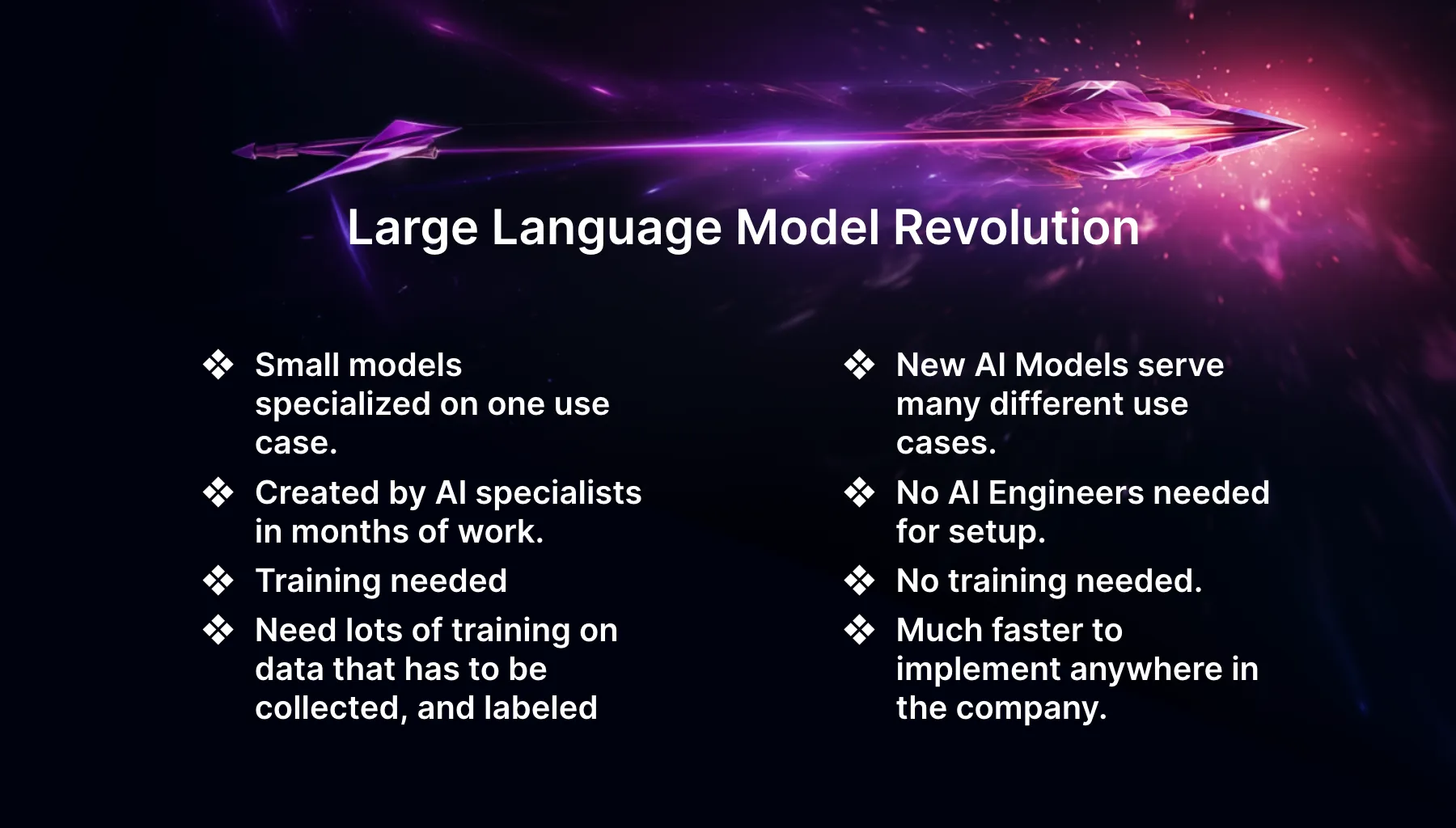

Large Language Model Revolution

Previously there were a lot of smaller language models that were specific to different industries. These models needed months of work by AI experts. They were also extremely resource-intensive and time-consuming as they also took a lot of time to train. Moreover, the data required to train these models needed to be gathered, labeled, and prepared.

So the innovation of large language models made this technology general and easier to use. LLMs didn’t serve a specific field, so they could be used for several use cases. They didn’t require you to gather labeled datasets and specialized engineering capabilities. This made them reliable and robust to be used anywhere.

However, LLMs needed extremely high computing power to train and were expensive. This meant the big tech had a monopoly and the only option to use them was through the provided APIs. Many organizations could not use them because of several issues like lack of control over models, data protection, and data sovereignty.

Things took a turn after Meta released LLAMA-2. It made top-notch LLM models available to everyone that could be used by anyone without compromising data integrity. The open-source community has been since rolling out many updated versions on a daily basis. Now, you can find a ready-to-use, fine-tuned version for almost any niche or use case.

Suggested Reading: 7 Open Source LLMs you need to know about

How Can You Use LLMs?

Now you may ask how to use LLMS on an organizational or business level. Let’s talk about different ways to do that.

Prompt Engineering

One way to work with LLMs is prompt engineering. It involves asking LLM the right questions. You can test different models rapidly using prompt engineering. So, It is a fast and efficient way to get the results you want. You can use prompt engineering with almost any AI web interface (i.e. also ChatGPT).

Nonetheless, there are drawbacks to consider, especially when using managed services like ChatGPT. It sends out data to OpenAI and the likes, which is not suitable for sensitive data. The other downside is that the prompt you can send out cannot be more than 2048 words, which can be very limiting for some use cases.

RAG (Retrieval-Augmented-Generation)

Retrieval Augmented Generation known as RAG is basically an AI framework. It tries to improve the quality and accuracy of responses generated by LLMs. This framework allows you to integrate an external (or company internal) database, which your LLM can fetch information from.

How it works is, that when a query is made, RAG first searches the database to find relevant information. The retrieved information is then used to craft a coherent response to the query. This makes these models factually accurate and relatively fast to implement.

Although, you will still need to deploy your own LLM if you don’t want to share your data with OpenAI. Also, whether you use self-hosted LLMs or API-based you would require some ML Ops or DevOps engineers.

Fine-tunning

This approach involves improving or customizing an existing LLM model with your own unique data. Multiple experiments have shown that smaller LLMs trained with specific data perform as well as or better than larger LLMs.

The benefit of this approach is that you get complete data autonomy. It also allows you to have complete control over your LLM model as it won’t get impacted by any updates of terms from an API provider. Moreover, on a large scale, this could be the cheapest possible solution for you. The downside to this approach is that you need your own data center and DevOps experts to implement these models.

Comparison of API-Based vs Self-Hosted LLMs

If you look at the benefits and drawbacks of API-based vs self-hosted LLMs, these are the points that will stand out.

API-Based LLMs Considerations

- You can start using them immediately and get a good performance from the get-go.

- The initial pricing for these models is low and you don’t need ML Ops engineers to set up.

- With managed LLMs you can only work only with very limited data, typically limited to a few thousand words only, even if data is injected via RAG and your company does not own the IP.

- You have to send your sensitive data over the internet to large (maybe foreign) companies.

- You do not have control over the model and can be affected by the changing updates (that can change prompt output quality for your use case), policies or terms of the service provider.

Self-Hosted LLMs Considerations

- These models are customizable and fast.

- You do not risk any data leaving the country where your data centers are located.

- You can work with unlimited amounts of data via fine-tuning.

- Self-hosted LLMs typically require expensive hardware, typically a few A100 GPUs that cost ~15k per month.

- These models also need constant infrastructure maintenance that can cost you around 20% of your project cost.

- You need expensive DevOps Engineers for the setup, hosting, versioning, and application layer around the model. In addition to that to evaluate, fine-tune and improve the model you require ML Ops engineers.

- After all these financial and workforce resources it can take up to 6 months until the infrastructure project is set up.

How Codesphere Helps Businesses Self-Host LLMs?

So, the two major issues when it comes to self-hosted LLMs are cost and complexity. Here is how Codesphere can help you navigate through these challenges.

Cost

Servers where you run LLMs usually have a fixed size regarding computing and memory. Running inference (serving requests/sending queries and getting an output) for AI models takes high computing resources per request. This is quite a bit higher than what a normal website takes.

In LLMs, a single request from a user takes multiple seconds and (almost) fully occupies one server core. If we want to serve multiple requests in parallel we need multiple servers or server cores (which refer to the number of virtual CPUs/GPUs in a server).

The traffic and the number of requests your model gets fluctuate and are hard to predict accurately. What happens usually is that organizations estimate the peak traffic and provision & pay the corresponding amount of resources to accommodate the peak hours. This for obvious reasons increases the cost.

Codesphere's patented technology allows “off when unused” server plans, which automatically turn off when you are not getting user requests. These servers have super fast cold starts and they can be up and running in (10-20ms in the future) 1-5 seconds currently. For example, if you have a chatbot for internal use in your organization, it will only cost you computing resources during office hours.

Our educated estimate suggests it is over 90% cheaper to run low-traffic models. Moreover, scaling your models can be 35% cheaper because you do not have to reserve the maximum computing capacity to accommodate peak traffic hours.

Zero config cloud made for developers

From GitHub to deployment in under 5 seconds.

Review Faster by spawning fast Preview Environments for every Pull Request.

AB Test anything from individual components to entire user experiences.

Scale globally as easy as you would with serverless but without all the limitations.

Complexity

Setting up an AI model is easy on Codesphere. It takes under two minutes to deploy an open-source LLM model like LLAMA2. You do not need to have DevOps or Machine learning engineers to set it up or maintain it. So, there are no maintenance costs.

If you want to fine-tune your model, that can be done by anyone. This can be achieved by fine-tuning your model with the help of services like chatGPT. Moreover, setting up the whole application follows Google’s software development standards. It is pre-set and can be used without any configuration.

Suggested Reading: Software Development: Moving Away from Sequential Workflows

Conclusion

Generative AI has progressed exponentially in recent years in terms of performance as well as availability. The open-source LLM models are at par with the API-based LLMs and much more beneficial in most cases. It is neither complex nor expensive to host your own LLMs anymore. It is a great development for businesses and organizations who want to use the technology without giving up data autonomy and control. Codesphere, effectively resolves the cost and complexity issues that organizations face when they opt for self-hosting.